By April Dobbins

Shalini Kantayya’s illuminating tech-documentary, Coded Bias, follows Joy Buolamwini, an artificial intelligence (A.I.) researcher who makes it her mission to fight algorithmic bias. We learn early on in the film that facial recognition software often misidentifies the faces of women and People of Color. Buolamwini notes that the inaccuracies in facial recognition programs might be humorous when we find ourselves misidentified in social media photos, but they pose a more serious threat when used by authorities as justification to detain or search citizens. With Kantayya at the helm and Buolamwini as protagonist, Coded Bias makes for a compellingly watchable deep dive into present-day privacy concerns in our digital world.

In 1998, MIT created Kismet, one of the first robots able to perceive social cues and display emotions based on its interactions with humans. At the time, Buolamwini was just a child, but she was so awed by Kismet that she set her sights on MIT. Years later, Buolamwini was accepted to her dream school as a graduate student at the MIT Media Lab, one of the world’s leading research and academic organizations.

When we meet her, she is a Ph.D. candidate. The film captures Buolamwini on MIT’s picturesque campus in Cambridge, Massachusetts, as she recounts what led her to explore this particular sector of technology. While enrolled in a science fabrication course where students were asked to build something—a machine, a piece of technology—inspired by literature, Buolamwini decided that she would build an “Aspire Mirror.” This mirror would use augmented reality to project other faces onto her reflection in the morning for inspiration. For example, she might see Serena Williams superimposed on her reflected face giving her a motivational pep talk before starting the day.

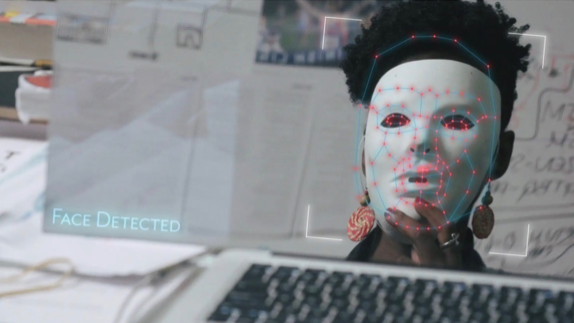

Excited to get her project underway, Buolamwini ordered basic facial recognition software, but there was one big problem: the software could not recognize her face. At first, she thought the lighting or any number of other factors might be to blame, but after testing the software on friends under similar conditions, Buolamwini, a Black woman, suspected it might be her darker skin. To test the theory, she put a featureless white mask over her face and, disturbingly, the software recognized the white face immediately.

According to Buolamwini, this wasn’t the first time it had happened to her. As an undergraduate student at the Georgia Institute of Technology, she worked with social robots. In one class project, she had to get a robot to play “Peek-a-Boo” with her. However, the robot could not see Buolamwini’s face. She ended up “borrowing” her roommate’s non-Black face to complete the project.

Later, on a trip to China for an entrepreneurship competition, Buolamwini and her peers visited a local start-up that worked with social robots. During the demonstration, the robot could recognize everyone’s face but hers. Buolamwini assumed that researchers would surely fix the problem, but by the time she got to graduate school, she realized that no one even knew A.I. had a problem. At the time, a common refrain among people defending machine results was that it was impossible for A.I. to be biased.

What we learn from Buolamwini and the other stellar women scientists in Coded Bias is that the issues in A.I. are baked in by its creators. To train machines, creators must feed them data sets. If machines are fed mostly white faces, they have trouble identifying Black faces as “faces” at all. Most of the designers and founders of A.I. are white men whose data sets do not reflect the diversity of the world. When tested, these machines are much more accurate at identifying white men than at identifying anyone else.

“Machine learning is being used for facial recognition, but it’s also extending beyond the realm of computer vision,” Buolamwini says in her TED Talk. “Law enforcement is also starting to use machine learning for predictive policing. Some judges use machine-generated risk scores to determine how long an individual is going to spend in prison. So, we really have to think about these decisions. Are they fair?”

For Buolamwini, the problem with A.I. isn’t that the technology is imperfect, but that people and organizations fail to question it. Machine verdicts are accepted as truth, with no real accountability or recourse for those harmed. Machine scores are used to make decisions about loans, college admissions, hiring, promotions and terminations.

Kantayya turns her focus to Europe and Asia to call attention to A.I.’s global reach. In the United Kingdom, we’re introduced to Big Brother Watch, an organization that monitors police use of facial recognition technology in high-traffic areas. Police vans equipped with facial recognition software scan the faces of pedestrians on a public street, looking for matches to known criminals. When the system flags someone, officers detain and question that person.

In one instance, we witness police detaining a Black schoolchild after the system misidentifies him as a criminal. Still in his school uniform, the boy is visibly frightened as police corner and question him without an adult present. In another instance, a man covers his face to avoid being scanned. Police treat this as cause to detain him, even though he has every right to refuse a scan. In both instances, a representative from Big Brother Watch intervenes to support those wrongfully detained.

Coded Bias uses China as an example of how authoritarian societies use A.I. to monitor and control their citizens. Surveillance in China is open and omnipresent: face scans and ID cards are required at vending machines and subway turnstiles. In the United States, the extent of surveillance is less clear, and the motive is often commercial. As one scientist notes, in a capitalist model, the wealthy are targeted with luxury items that everyone wants and aspires to own.

Poor people, meanwhile, are targeted by predatory industries such as payday loans, casinos and for-profit colleges. Even more disturbing, A.I. is often tested first on poor communities and communities of color, without regard for accuracy or for the rights of those communities. Hence, those with the least support are the most frequently victimized.

As a result of her findings, Buolamwini started the Algorithmic Justice League, “an organization that combines art and research to illuminate the social implications and harms of artificial intelligence.” She also presented her research as part of her testimony before the United States House Committee on Oversight and Reform. Buolamwini took large corporations like Amazon, Microsoft and IBM to task over their facial recognition technology.

In the film, Buolamwini grapples with the aftermath of Jeff Bezos publicly questioning and then dismissing her research, after which she leans on her network of scientists and researchers, most of whom are women of color. Buolamwini notes that she developed a thick skin out of necessity: if you are a Black woman in science, even the least qualified people will come for you, whether or not they have data to back up their claims. In a surprising turn, Amazon recently announced a one-year moratorium on police use of its Rekognition facial recognition technology. IBM and Microsoft also scaled back their facial recognition plans: IBM said it would stop offering general-purpose facial recognition software, and Microsoft said it would not sell the technology to U.S. police departments until federal regulation is in place.

Coded Bias deftly presents scientific information in a way that any layperson can understand. Buolamwini is thoughtful and engaging as she explains her work. While the subject has the potential to be bleak, Buolamwini’s optimism and activism are encouraging. The film premiered earlier this year at Sundance and has since screened at BlackStar Film Festival and the American Black Film Festival, among others.

The South Miami-Dade Cultural Arts Center screened Coded Bias on Tuesday, Sept. 15, and Sunday, Sept. 20, as part of the Southern Circuit Tour of Independent Filmmakers. For more information on future screenings, visit www.facebook.com/codedbiasmovie/.

April Dobbins is a writer and filmmaker based in Miami. Her work has appeared in a number of publications, including the Miami New Times, Philadelphia City Paper, and Harvard University’s Transition magazine. Her films have been supported by the Sundance Institute, International Documentary Association, Firelight Media, ITVS, Fork Films, Oolite Arts, and the Southern Documentary Fund. She is a graduate student at Harvard University.